Illustration by Rawand Issa for GenderIT.

Artificial intelligence (AI) technologies and tools are being adopted at an unprecedented pace throughout all sectors. But in the absence of ethical codes and regulatory frameworks that can guide the development and use of these technologies, this development comes with ethical, legal, and human rights concerns for users, especially vulnerable groups of society who face different types of biases based on gender, age, ethnic origin, religion, and political and sexual orientation.

Contrary to what is being promoted about AI, it is not “magic”, and does not have inherent intelligence. Rather, it is data mixed with statistics and human-created hypotheses to reach specific results. Through a wide range of such inputs, a class of people with power and authority, primarily male, decides the output that must be obtained. Therefore, this technology is neither immune nor exempt from projecting human biases.

This is consistent with what was documented in 2020 in the UNESCO Global Dialogue on Gender Equality in AI Technologies. Standard tools and principles of artificial intelligence that adequately address gender equality seem to have been non-existent until very recently, and the current procedures are not enough. Perhaps the problem of believing in “Enchanted Determinism,” that these are magical systems that can provide deep insights as solutions to dilemmas in miraculous ways, is the main reason for not acknowledging the existence of various forms of bias, discrimination, and racism in many of these technologies.

However, there are promising practices for addressing biases within AI systems, and for employing AI tools to help address gender inequality itself. Although the AI community is still overwhelmingly male, there have been attempts by some researchers and companies in recent years to make it more welcoming for women and other underrepresented groups.

The Reality of Latent Gender Justice in the Tech Landscape

According to the World Economic Forum, it will take another 132 years for the world to achieve gender equality in general. However, it is striking that, over the past two decades, an active gender gap has been observed with regard to women’s access to education and employment in science, technology, engineering, and mathematics (STEM) fields. Studies show that women represent a minority in these fields, which are the cornerstone of AI science. Labour market experts attribute this absence of women to the increased burden that women bear with regard to differences in human capital, domestic responsibilities, and employment-related discrimination. According to UNESCO estimates, only 12% of AI researchers are women, and they “represent only 6% of software developers and are 13 times less likely to file information and communications technology (ICT) patents than men.” These percentages and numbers raise an important question: How does this gap in representation in technology and artificial intelligence affect society and women in particular?

To understand bias in AI, it must be noted that biases do not arise at a single moment. Rather, they appear at several stages of an artificial intelligence system: in the algorithm development process, in the dataset training phase, and in how the system handles decision-making.

As AI relies on the vast amount of data available online to learn from and iterate, it risks reproducing and perpetuating societal biases. This is evident in many branches of artificial intelligence such as natural language processing (NLP), for instance the ChatGPT model, where “word embedding” can lead to linguistic biases resulting from gender, ethnic, or societal discrimination. Some AI technologies also reflect societal biases with regard to recruitment. Amazon had to scrap a recruiting tool that was intended to streamline the recruitment process in its human resources department after it became apparent that the AI algorithm favoured male candidates. Furthermore, discrepancies in the available health data in favour of men could make medical applications that use artificial intelligence less accurate for women, which means an actual risk to their health.
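To make the “word embedding” point more concrete, the following is a minimal sketch of how such a linguistic bias can be measured. It assumes that words are represented as numeric vectors and compares how close an occupation word sits to “he” versus “she”. The three-dimensional vectors and the `gender_lean` function are invented purely for illustration; they do not come from ChatGPT or any real model.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def gender_lean(word_vec, he_vec, she_vec):
    """Positive: the word sits closer to 'he'. Negative: closer to 'she'."""
    return cosine(word_vec, he_vec) - cosine(word_vec, she_vec)

# Made-up toy vectors (an assumption for illustration, not real model output).
toy_vectors = {
    "he": [1.0, 0.0, 0.2],
    "she": [0.0, 1.0, 0.2],
    "engineer": [0.8, 0.3, 0.5],
    "nurse": [0.2, 0.9, 0.4],
}

for word in ("engineer", "nurse"):
    lean = gender_lean(toy_vectors[word], toy_vectors["he"], toy_vectors["she"])
    print(f"{word}: {lean:+.2f}")
```

In a real audit, the vectors would be taken from the model under examination, and a consistent lean across many occupation words would be one signal that the training text carried gendered associations.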

In the following, we shed some light on the most significant of these risks:

Harassment and violation of privacy

Artificial intelligence systems are already being used to create fake, non-consensual photos and videos of women, exposing them to harassment and privacy violations. These fake photos and videos, popularly referred to as deepfakes, are used to publish offensive, sexual, or misleading content, thereby causing significant negative psychological and social effects on women.

Perhaps the unprecedented speed at which these technologies are being developed and commercialised is what increases the misuse of AI in the form of deepfakes. In the past, creating a deepfake was technologically complex and time-consuming, but now it can be done for just a few dollars, or even for free, on some applications and programs.

This problem is exacerbated by existing issues related to gender-based violence and the absence of accountability, coupled with the lack of knowledge in society about these tools and the dimensions of their misuse. Various reports have also shown that the vast majority of deepfakes on the Internet are pornographic videos targeting women.

Reality of Bias and Discrimination

Built-in biases in AI systems raise the alarm about deepening and entrenching existing biases against women and gender and sexual minorities, as these systems are trained on data that may contain sexist, racial, or social prejudice. This can lead to discrimination against these communities in important fields such as employment, loans, or criminal justice, thereby negatively affecting their opportunities and rights.

An investigation by The Guardian revealed that algorithms used by social media platforms decide what content to promote and what content to suppress, in part based on the extent of the sexual suggestiveness of the photo. The more “sexually suggestive” the photo is, the less likely it is to be seen by the public. According to the investigation, it was found that AI algorithms mark photos of women in daily life situations as such and classify more photos of women as “racy” or sexual than similar photos of men.

As a result, social media companies have suppressed the reach of countless photos that feature women’s bodies and harmed women-led businesses—leading to increasing societal inequalities, even as far as affecting medical services.

AI algorithms were tested on the image below, released by the US National Cancer Institute, which shows how a clinical breast examination is performed. Google classified this image as having the highest score for raciness, and Microsoft’s AI was 82% confident that the image was “of a sexually explicit nature”, even though it is nothing more than a shot of a patient’s cancer screening.

Microsoft’s AI was 82% confident that this image demonstrating how to do a breast exam was ‘explicitly sexual in nature’, and Amazon categorised it as ‘explicit nudity’. Photograph: National Cancer Institute/Unsplash

This problem is just the tip of the iceberg for women navigating AI algorithms with a built-in gender bias.

To address this, tech companies should thoroughly analyse their data sets to include more diversity, as well as a more diverse team that can pick up on nuances. Companies should also check that their algorithms work similarly on images of men versus women and other groups.
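As a rough illustration of what such a check could look like, the sketch below compares how often a classifier flags images as “racy” for each group. The function names and the sample records are hypothetical and are not taken from any company’s tooling or from the Guardian investigation; a real audit would use matched, comparable images and a far larger sample.

```python
from collections import defaultdict

def flag_rates(records):
    """Return the share of flagged images per group.

    records: iterable of (group, flagged) pairs, where flagged is a bool.
    """
    totals = defaultdict(int)
    flagged_counts = defaultdict(int)
    for group, is_flagged in records:
        totals[group] += 1
        if is_flagged:
            flagged_counts[group] += 1
    return {group: flagged_counts[group] / totals[group] for group in totals}

def disparity_ratio(rates, group_a, group_b):
    """How many times more often group_a is flagged than group_b."""
    return rates[group_a] / rates[group_b]

# Hypothetical audit sample: comparable everyday photos, labelled by the
# group shown and by whether the classifier flagged them as "racy".
sample = [
    ("women", True), ("women", True), ("women", True), ("women", False),
    ("men", True), ("men", False), ("men", False), ("men", False),
]

rates = flag_rates(sample)
print(rates)
print(disparity_ratio(rates, "women", "men"))
```

A ratio well above 1 on comparable images would suggest that the classifier treats photos of women more harshly and that its training data and thresholds need review.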

In addition to gender bias, AI faces challenges related to race, which has alarming implications for black and brown people. An example of this is the facial recognition algorithms studied by Joy Buolamwini, the founder of the Algorithmic Justice League, who found that the share of input images used to train facial recognition algorithms was 80% white people and 75% male faces. As a result, the algorithms have a high accuracy of 99% in recognising men and lighter-skinned people, while the system’s ability to recognise black women was much lower, at 65% of cases.

Generative AI and Biases

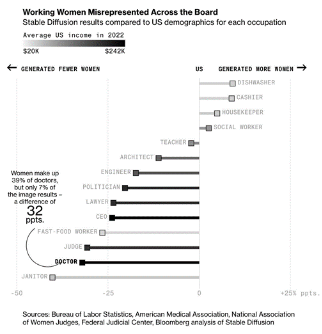

Along the same lines, another investigative effort looked at the role of generative AI models in exacerbating racist and gender stereotypes against women of colour. The research team asked Stable Diffusion, the world’s largest open-source platform for AI-generated images, to generate thousands of worker images for 14 jobs and 3 crime-related categories. The racial and gender bias in the results was clear. Women and dark-skinned people made up the highest percentage of the images generated for low-paying jobs. Over months of examining thousands of images, it was found that not only did the AI model repeat or mirror real-world stereotypes and disparities, it also amplified and reinforced them.

Sources: Bureau of Labor Statistics, American Medical Association, National Association of Women Judges, Federal Judicial Center, Bloomberg analysis of Stable Diffusion

While 34% of American judges are women, only 3% of the generated images for the word “judge” were women. Among fast-food workers, the model produced 70% dark-skinned people, even though 70% of fast-food workers in the US are white.
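The gap between the model and reality can be expressed as a simple ratio. The sketch below is only an arithmetic illustration using the two judge figures quoted above; the function name `representation_ratio` is our own and is not part of the Bloomberg analysis.

```python
def representation_ratio(generated_share, real_world_share):
    """Share of a group in generated images divided by its real-world share.

    1.0 means the model mirrors reality, values below 1.0 mean the group
    is under-represented, and values above 1.0 mean it is over-represented.
    """
    if real_world_share <= 0:
        raise ValueError("real_world_share must be positive")
    return generated_share / real_world_share

# Figures quoted in the text: women are 34% of American judges,
# but only 3% of the images generated for the word "judge".
judges_ratio = representation_ratio(0.03, 0.34)
print(f"Women among generated judges relative to reality: {judges_ratio:.2f}")
```

On these figures the ratio is roughly 0.09, meaning women appear in the generated images of judges at less than a tenth of their actual share of the profession.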

It may, for a while, seem that the gender gap in data does not clearly and seriously threaten women’s lives, and that these are just disparities that can be overcome here and there. However, the expanding design and use of artificial intelligence models across various industries, with this number of biases, can greatly harm women’s lives and livelihoods. Although it is agreed that plenty of “good” data can indeed help bridge gender gaps, there are still concerns that if the “right” questions are not asked in the data collection process (including by women), the gender gap can widen when algorithms are misinformed.

Experts would argue that one promising way to ensure that historical gender biases are not amplified and projected into the future is to increase intellectual diversity through the number of women working in technology. Only 22% of AI professionals globally are women compared to 78% male, according to the World Economic Forum. At eight big tech companies, Bloomberg found that only 20% of technical roles are held by women. As for Facebook and Google, less than 2% of technical roles are held by black employees, and tech organisations are not only recruiting fewer women than men, but they are also losing them at a faster rate. Globally, women only represent 25% of workers in science, technology, engineering, and mathematics (STEM), and only comprise 9% of leadership in these areas, according to Boston Consulting Group (BCG).

Perpetuating Gender-Based Cultural Stereotypes

It is not enough to merely include women. We must also examine how gender-based cultural stereotypes may be reflected in the design and development of AI applications.

The “feminization” of AI tools reinforces negative gender stereotypes by focusing on traditional gender roles through voice and appearance, as is the case with home voice assistants (AI) such as Amazon’s Alexa, Microsoft’s Cortana, and Apple’s Siri, which by default have female voices.

The attempt to “humanise” virtual assistants and robots as feminine is deeply problematic, as seen, for example, in the hardware and software design of the humanoid robot “Sophia”. These female-coded designs have met with the same harassment and violence that women face in real life. Alexa’s developers, for example, had to contend with this problem by introducing a “disengage mode” after the assistant was harassed by men.

AI Biases in the Middle East

Biases in artificial intelligence tend to worsen as we get closer to the Middle East. For example, when generative AI tools like Midjourney are prompted for images of a terrorist persona, the results show Middle Eastern facial features.

As an AI ethics researcher, I understand the challenges of studying the impacts of bias in AI systems in the Middle East. Indeed, there is a significant lack of comprehensive studies and research shedding light on this particular critical issue. The scarcity of relevant data and research might hinder a comprehensive understanding of the implications of this bias and impede efforts to develop more inclusive and equitable AI systems tailored to the unique cultural and social context of the region. To address this issue, it is crucial for researchers, institutions, and policymakers to prioritise and invest in conducting in-depth studies and research on AI ethics, including the examination of biases affecting the Middle East. Such efforts can help identify and mitigate the impacts of biases in AI systems, promote transparency and accountability, and ensure the development of ethical and responsible AI technologies for the region and beyond.

Countering Biases in Artificial Intelligence

The first tool of treatment is diagnosis. Therefore, it is important to identify the areas in which gender-based bias in AI is evident.

It is also necessary to shed light on who defines the algorithms and instructions that artificial intelligence follows, because technology reflects the underlying beliefs and ideas of the programmers. Moreover, the problem of insufficient representation is one of the most important issues that require attention, as the field of artificial intelligence suffers from a lack of representation of women in research, development, and decision-making. Studies also point to the unique challenges facing women in STEM professions, as half of women scientists experience sexual harassment in the workplace globally. Therefore, along with increasing the participation of women in STEM professions (including in the field of AI), employers must develop support structures to deal with these challenges and develop zero-tolerance policies on gender-based violence in the workplace as well as a means to monitor and enforce these policies.

To protect women and reduce these risks, there should be strong guidelines and a legal framework regulating the use of AI. It also requires promoting awareness and training on the ethical and responsible use of technology as well as ensuring adequate representation of women in decision-making and technological development.

Perhaps one of the most important and effective solutions is the adoption of “Ethical AI”: an approach that takes multiple elements into account when dealing with issues related to gender, race, ethnic origin, socio-economic status, and other factors, combined with a human rights-based approach to the management of artificial intelligence that centres on transparency, accountability, and human dignity. To achieve this, various parties including technology companies, academics, United Nations entities, civil society organisations, mass media, and other stakeholders should collaborate to explore common solutions to women’s problems within AI technologies.

The UN Secretary-General’s proposal for a Global Digital Compact, which is to be agreed upon at the Summit of the Future in September 2024, is a step in the right direction, but it is not sufficient: such a compact must also address the potential gender biases perpetuated by AI and the solutions to them. To make AI ethical and a vehicle for the greater good, it must eliminate any explicit and implicit biases, including those related to gender. Therefore, the importance of incorporating inclusive and diverse datasets during AI training cannot be overstated. This entails moving beyond solely relying on white men to train algorithms and actively involving individuals from diverse backgrounds, such as people of color, women, and other gender-diverse individuals, in the AI development process.

In conclusion, to ensure a secure future for artificial intelligence, we need practical tools to address biases in AI against women, including ongoing awareness campaigns, improving data collection, self-auditing algorithms, promoting diversity in the technology industry, adopting ethical AI principles, and enhancing communication and partnership among all stakeholders.
